Micron Stock Price Forecast: Is NASDAQ:MU Near $363 Still Early In The AI Run?

MU jumps from $61.54 to about $362.75 as AI-driven HBM/DRAM and NAND price spikes, projected EPS near $41.40, 45% operating margins and insider buying around $336 keep Micron’s stock price forecast tilted toward further upside

NASDAQ:MU – AI Memory Bottleneck Turns Micron Into A $400B Core Infrastructure Name

Price, Range And Valuation Snapshot For NASDAQ:MU

NASDAQ:MU trades around $362.75–$363.07, after a 7.76% jump from a previous close at $336.63. The last session’s intraday range ran from $352.04 to a fresh high of $365.81, which is also the top of its 52-week band of $61.54–$365.81. Market value is now about $408.28 billion, with a trailing P/E ratio near 34.5x, a symbolic 0.13% dividend yield, and average daily volume around 28.7 million shares. Measured from the 52-week low of $61.54, the stock now sits almost 6x higher, and over the past twelve months NASDAQ:MU has delivered roughly 200–250% gains, outpacing even aggressive AI peers. Despite that move, forward earnings projections of EPS around $41.40 in roughly 18 months imply a much lower forward multiple than the current trailing P/E suggests, which is why the valuation frameworks discussed below still point to upside.
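The gap between the trailing and forward multiples can be checked with a few lines of arithmetic. The sketch below uses only the price, trailing P/E and projected EPS quoted above; the variable names are illustrative, and the trailing EPS is back-solved from the quoted multiple rather than taken from a filing.

```python
# Figures quoted in the article; variable names are illustrative.
price = 362.75          # recent share price, USD
trailing_pe = 34.5      # quoted trailing P/E
projected_eps = 41.40   # projected EPS roughly 18 months out, USD

# Back-solve trailing EPS from the quoted multiple (an approximation,
# not a reported figure).
implied_trailing_eps = price / trailing_pe

# Forward multiple if the projection is met and the price stays flat.
forward_pe = price / projected_eps

print(f"Implied trailing EPS: ${implied_trailing_eps:.2f}")
print(f"Forward P/E on projected EPS: {forward_pe:.1f}x")
```

On these inputs the forward multiple comes out below 9x, which is the compression the paragraph describes. The result is only as good as the $41.40 projection.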

AI Demand, DRAM Pricing Power And The HBM Bottleneck For NASDAQ:MU

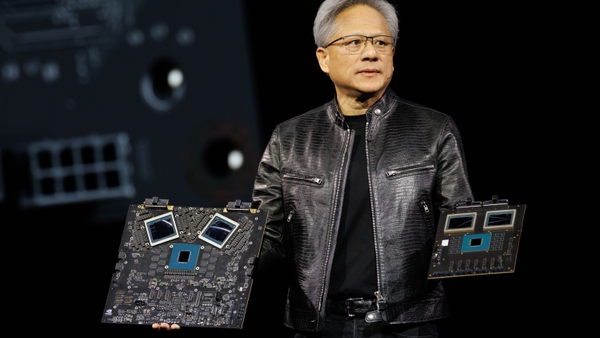

The re-rating of NASDAQ:MU is rooted in the AI memory super-cycle. Micron sits in a tight oligopoly: one of only a few global suppliers of high-bandwidth memory used beside GPUs and custom accelerators in cutting-edge AI systems. Training and inference on large language models are increasingly memory-bound, not compute-bound. Parameters, activations and intermediate states must be stored and accessed at extremely high bandwidths. As model sizes and context windows grow, memory requirements often scale faster than compute. That shift has turned HBM and advanced DRAM into the bottleneck, not the GPU silicon itself. This is exactly where Micron’s HBM and DRAM portfolio is concentrated.

Pricing data confirm how distorted the market has become. Industry DRAM average selling prices jumped roughly 40% quarter-on-quarter in the December period, an extraordinary move for a segment that used to be treated like a commodity. At the same time, major OEMs such as HPE and Dell are preparing customers for further DRAM price hikes as they pass through supply-side pressure. Rivals Samsung and SK hynix are reported to be considering server DRAM increases of up to 70% in the current quarter, which, if implemented, would lock in a structurally higher pricing plateau. Micron management has already described the gap between demand and supply for DRAM, including HBM, as the largest they have ever seen. With 2024 and 2025 HBM output essentially sold out and 2026 still tight, NASDAQ:MU is positioned to monetise scarcity through both volume and price.

Strategically, Micron is reinforcing this leverage with a dual-track HBM4E roadmap that offers both standard and custom memory stacks. AI infrastructure buyers are no longer only deploying merchant GPUs; they are designing LPUs, TPUs and application-specific accelerators where memory layout is fundamental to performance. Being able to match specific HBM configurations to each architecture strengthens Micron’s role in future design wins and makes the NASDAQ:MU multiple less about one cycle and more about persistent infrastructure relevance.

NAND, SSDs And Edge AI – The Second Growth Leg Under NASDAQ:MU

While HBM headlines dominate, NAND is becoming a second, under-priced driver for NASDAQ:MU. Micron’s data-center NAND portfolio already exceeded $1 billion in sales in a single fiscal quarter, powered by its ninth-generation G9 NAND and Gen6 SSDs such as the 9650, which are tuned for high-throughput storage in AI data centers. NAND historically lagged DRAM in AI workloads due to higher latency, but as inference workloads scale and cost per token becomes a board-level metric, cheaper non-volatile storage matters.

In the December quarter, NAND prices jumped roughly 20% sequentially, and industry expectations point to an average smartphone NAND price rise of up to 40% over the year as AI-driven handset upgrades collide with constrained supply. AI PC shipments are projected to grow about 52% year-on-year in 2026, with confirmed PC memory price increases near 20% in some channels. This flows directly into bit-demand growth for NAND and keeps the pricing environment tight.

Micron is positioned on both the data-center and client side. In the data center, its G9 NAND underpins high-capacity SSDs optimized for heavy AI I/O patterns. On the edge, its QLC-based 122TB and 245TB drives target dense storage, while the G9 QLC-based 3610 client SSD delivers up to 4TB capacity with more than 40% performance-per-watt improvement. Designed for ultrathin laptops and fanless systems, this device uses high-density 3D NAND stacking to pack more capacity into a smaller footprint. QLC traditionally trades latency and endurance for density and cost, but Micron’s client portfolio is tuned to recover performance while maintaining the economic advantage, which is exactly what AI-capable PCs and edge devices need.

The net result is that both DRAM and NAND are now participating in the AI upgrade cycle. That compounds the earnings leverage for NASDAQ:MU and means investors are not just betting on one HBM line; they are exposed to a broad AI memory stack from the cloud to the endpoint.

Revenue, Margins And EPS Trajectory Behind NASDAQ:MU

The financials justify the market’s shift in how it values NASDAQ:MU. In the first quarter of fiscal 2025, Micron reported about $8.7 billion in revenue and roughly $5.3 billion in cost of goods sold, leaving around $3.3 billion in gross profit and an operating margin near 25%. One year later, fiscal 2026 first-quarter revenue rose to approximately $13.6 billion, an increase of roughly $5 billion, while the cost base moved only marginally. With ASPs for DRAM and NAND spiking, every incremental dollar of revenue carries a high contribution margin. The operating margin responded by jumping to about 45%, an improvement of roughly 20 percentage points in twelve months, which is rare for a mature semiconductor name.
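The operating-leverage claim can be made concrete with a rough incremental-margin calculation. This is a minimal sketch built on the rounded figures quoted above, so results are approximate (the rounded inputs give gross profit of about $3.4 billion rather than the quoted $3.3 billion). The operating income values are derived from the quoted margins, not reported numbers.

```python
# Quarterly figures quoted in the article, in billions of USD (rounded).
rev_q1_fy25 = 8.7
cogs_q1_fy25 = 5.3
rev_q1_fy26 = 13.6
op_margin_fy25 = 0.25   # "near 25%"
op_margin_fy26 = 0.45   # "about 45%"

gross_profit_fy25 = rev_q1_fy25 - cogs_q1_fy25
gross_margin_fy25 = gross_profit_fy25 / rev_q1_fy25

# Operating income implied by the quoted margins (derived, not reported).
op_income_fy25 = rev_q1_fy25 * op_margin_fy25
op_income_fy26 = rev_q1_fy26 * op_margin_fy26

# Incremental operating margin: share of each added revenue dollar
# that reached operating income over the twelve months.
incremental_margin = (op_income_fy26 - op_income_fy25) / (rev_q1_fy26 - rev_q1_fy25)

print(f"Q1 FY25 gross margin: {gross_margin_fy25:.1%}")
print(f"Incremental operating margin: {incremental_margin:.1%}")
```

With these inputs, roughly 80 cents of every additional revenue dollar flowed through to operating income, which is what lifts the overall margin from about 25% to about 45%.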

Earnings followed the same arc. EPS surged about 538% in 2025, and analyst models now project EPS growth of roughly 293% in fiscal 2026 and around 27% in 2027, taking earnings per share toward $41.40 within roughly a year and a half if Micron delivers. On the top line, some detailed models now project fiscal 2026 revenue near $75.6 billion, a 102% year-on-year increase, followed by a four-year revenue compound annual growth rate close to 9% through 2030 as DRAM and NAND markets normalise but AI demand remains structurally higher.
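The growth rates above compound, so it helps to work them through explicitly. The sketch below back-solves the EPS and revenue bases implied by the quoted percentages and extends the 9% CAGR four years beyond fiscal 2026. The derived bases and the fiscal 2030 figure are arithmetic consequences of the quoted numbers, not reported or forecast values from any specific model.

```python
# Quoted projections; names are illustrative.
eps_target = 41.40      # USD, reached after two years of growth
eps_growth_fy26 = 2.93  # +293%
eps_growth_fy27 = 0.27  # +27%

# Two growth rates compound multiplicatively, not additively.
eps_multiplier = (1 + eps_growth_fy26) * (1 + eps_growth_fy27)
implied_base_eps = eps_target / eps_multiplier

rev_fy26 = 75.6         # billions of USD
rev_growth_fy26 = 1.02  # +102% year on year
implied_rev_fy25 = rev_fy26 / (1 + rev_growth_fy26)

# Extend the quoted ~9% CAGR over four years (fiscal 2026 to 2030).
cagr = 0.09
years = 4
implied_rev_fy30 = rev_fy26 * (1 + cagr) ** years

print(f"Cumulative EPS multiplier: {eps_multiplier:.2f}x")
print(f"Implied base EPS: ${implied_base_eps:.2f}")
print(f"Implied FY25 revenue: ${implied_rev_fy25:.1f}B")
print(f"Implied FY30 revenue at 9% CAGR: ${implied_rev_fy30:.1f}B")
```

The two EPS growth rates together amount to roughly a fivefold increase from the implied base, which is larger than either percentage suggests on its own.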

From a cash-flow perspective, this growth comes with classic semiconductor cyclicality, but the mix shift toward HBM and AI-linked NAND means a larger share of Micron’s revenue is attached to secular workloads rather than one-off PC or smartphone cycles. That mix shift is what is raising the floor under NASDAQ:MU earnings across future downturns, even if peak margins eventually compress from current extremes.

Valuation Frameworks, Targets And Relative Positioning Of NASDAQ:MU

On simple multiples, NASDAQ:MU at around $362–$363 trades at roughly 34–35x trailing earnings. Historically, Micron’s long-run average P/E has been closer to 17x, so on the surface the stock looks rich versus its own history. The difference is that those historical multiples were attached to a company whose earnings constantly collapsed in DRAM/NAND busts. Today’s multiples are being applied to a company with AI-driven secular demand, expanding margins and a credible path to multi-year EPS growth.

One discounted cash-flow model assumes revenue of about $75.6 billion in FY 2026, a four-year revenue CAGR near 9% through 2030, and a terminal value around $488 billion based on a 1.5% perpetual growth rate and a 9.6% weighted average cost of capital. Its base-case equity value implies a per-share price near $390. That represents roughly 10.5% upside from the $353 opening price referenced when the target was set, and about 7.5% above the current $362–$363 level if execution stays on track.
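The terminal-value inputs can be sanity-checked with the standard Gordon growth relation, TV = FCF_next / (WACC - g). The sketch below assumes the $488 billion figure was produced this way, which is not stated explicitly, and back-solves the free cash flow that would be needed in the first year after the forecast horizon. It also recomputes the upside percentages from the quoted prices.

```python
# Quoted DCF inputs; the Gordon growth form is an assumption about
# how the terminal value was derived.
terminal_value = 488.0   # billions of USD
wacc = 0.096             # weighted average cost of capital
g = 0.015                # perpetual growth rate

# Gordon growth: TV = FCF_next / (wacc - g), rearranged for FCF_next.
implied_fcf_next = terminal_value * (wacc - g)

# Upside arithmetic from the quoted prices.
target = 390.0
reference_price = 353.0  # opening price when the target was set
current_price = 362.75

upside_vs_reference = target / reference_price - 1
upside_vs_current = target / current_price - 1

print(f"Implied first-year terminal FCF: ${implied_fcf_next:.1f}B")
print(f"Upside vs $353 reference: {upside_vs_reference:.1%}")
print(f"Upside vs current price: {upside_vs_current:.1%}")
```

The spread between WACC and the growth rate is only 8.1 percentage points, so small changes in either input move the terminal value materially.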

A more aggressive relative valuation overlay, using forward EPS estimates around $41.40 and applying a 17x–20x multiple – broadly in line with or slightly above Micron’s historical range but far below Nvidia’s peak triple-digit P/E – produces fair-value bands that cluster closer to $600 per share. That is why some high-conviction AI-memory bulls argue NASDAQ:MU could still have room to almost double again over the next cycle if earnings materialise as projected and the market sustains a premium memory multiple.
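The multiple-times-EPS arithmetic behind this kind of overlay is simple to reproduce. The sketch below uses only the quoted EPS and multiple range. On these inputs a straight product lands above $600, so the $600 cluster presumably rests on inputs not spelled out here, such as an earlier-year EPS base or a discount back to today; that reading is an assumption.

```python
# Quoted inputs; names are illustrative.
forward_eps = 41.40     # USD
multiples = [17, 20]    # P/E range cited for the overlay

# Undiscounted fair-value band: multiple x EPS.
fair_value_band = [forward_eps * m for m in multiples]

# Multiple that a $600 price would represent on the same EPS.
implied_multiple_at_600 = 600 / forward_eps

for m, value in zip(multiples, fair_value_band):
    print(f"{m}x on ${forward_eps:.2f} EPS -> ${value:.0f}")
print(f"$600 corresponds to {implied_multiple_at_600:.1f}x")
```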

Supporting that view, quantitative factor models give Micron strong scores: Street consensus classifies the stock as a Buy with an average rating around 3.8–4.4 out of 5, and algorithmic systems characterise it as a Strong Buy with scores near 5.0, while short interest sits at only about 2.7% of float. The positioning shows little evidence of aggressive structural bearishness even after a multi-hundred-percent rally, which tells you how much respect the AI memory thesis now commands.

NASDAQ:MU Versus Nvidia 2023 – Similar Growth Arc, Different Risk Profile

The analogy many analysts reach for is straightforward: NASDAQ:MU today looks like Nvidia in early 2023, when the market first started to recognize the scale of the AI compute boom. Nvidia’s stock rallied more than 400% off the lows, then paused in a consolidation band while P/E spiked above 100x, only to double and then double again as earnings caught up and then overshot.

Micron’s situation is different in two crucial respects. First, memory is more oligopolistic and more capital-intensive than GPUs. DRAM and NAND fabs require enormous scale, precision and years of capex. That means supply cannot be ramped quickly just because prices rise, which slows the classic boom-and-bust response and extends the period of tightness. Second, Micron is still trading at around 30–35x earnings, with forward PEG ratios below 1.0, unlike Nvidia which spent a long stretch priced at triple-digit multiples before earnings normalised those ratios. If EPS does quadruple and then grow another 20%+ in the following year, Micron’s forward P/E compresses dramatically even without further price gains.
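The PEG ratio divides a P/E multiple by the expected earnings growth rate expressed in percent. Definitions vary on which P/E and which growth rate to use, so the sketch below is one reading under stated assumptions: it pairs a forward P/E computed from the quoted price and $41.40 EPS with the quoted 27% growth rate. The helper name `peg_ratio` is hypothetical.

```python
def peg_ratio(pe: float, growth_pct: float) -> float:
    """Return P/E divided by expected EPS growth in percent."""
    if growth_pct <= 0:
        raise ValueError("PEG is not meaningful for non-positive growth")
    return pe / growth_pct

# Quoted figures; the pairing of inputs is an assumption.
price = 362.75
forward_eps = 41.40
forward_pe = price / forward_eps

# Conservative case: use the slower 27% growth rate quoted for 2027.
forward_peg = peg_ratio(forward_pe, 27.0)

print(f"Forward P/E: {forward_pe:.1f}x")
print(f"Forward PEG at 27% growth: {forward_peg:.2f}")
```

Even with the slower of the two quoted growth rates, the ratio stays well under 1.0 on these inputs.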

The common element with Nvidia is the link to AI infrastructure. Nvidia monetised the compute side of the stack; NASDAQ:MU sits on the memory side, where every leap in model size and deployment scale multiplies memory requirements. As Google engineers and other hyperscalers have pointed out, inference is sequential and heavily memory-bound, meaning that without sufficient HBM and DRAM, the most advanced GPUs sit under-utilised. That systemic dependency is what underpins the argument that Micron is in the midst of a multi-year, not one-quarter, repricing.

Insider Transactions, Capital Allocation And Signalling For NASDAQ:MU

Price action has been reinforced by insider behaviour. Director Teyin Liu recently filed a Form 4 showing the purchase of 23,200 shares of NASDAQ:MU at prices between roughly $336.63 and $337.50. That is a multi-million-dollar personal allocation into a stock that has already more than tripled, not a token gesture. For investors tracking management confidence, that kind of open-market buying is a clean signal that insiders see more upside and are willing to add exposure at elevated levels.

For deeper tracking of board and executive dealing, investors can monitor Micron insider activity and the broader Micron stock profile, which aggregate Form 4 filings and institutional positioning. Combined with a 0.13% dividend that is likely to creep higher but will not be the core return driver, insider purchases tell you management prefers to reinvest heavily in capacity and technology and let shareholders participate primarily via capital gains, not yield.

Key Risks Around NASDAQ:MU – Cycles, Capex, Competition And AI Demand

Despite the AI-driven tailwinds, NASDAQ:MU still carries real risk. Memory remains one of the most cyclical corners of semiconductors. DRAM/NAND cycles have historically produced brutal price collapses once supply catches up and overshoots. Even with AI demand, models already show earnings decelerating after 2027 as new fabs in Idaho, Singapore and other regions enter full production and normalise the supply-demand balance. If those capacity additions arrive just as AI spend pauses or slows, the downside volatility in ASPs and margins will be significant.

Capex is the second pressure point. Micron is in the middle of a heavy investment phase, building and upgrading fabs to handle advanced HBM, DRAM and NAND nodes. Those projects consume billions of dollars and can drag on free cash flow in the short term. If the spend runs ahead of realised demand, returns on invested capital could undershoot the aggressive DCF assumptions that justify $390–$600 share-price targets.

Competition also matters. Samsung and SK hynix are not passive. SK hynix already holds a dominant position in HBM used alongside Nvidia GPUs, and Samsung has both scale and diversification. If either competitor executes more effectively on next-generation nodes or secures more of the incremental AI design wins, Micron’s share of the value pool could be smaller than the most optimistic models imply.

Finally, there is macro and AI-cycle risk. The bullish case for NASDAQ:MU assumes that AI investment continues at a high pace, that AI PCs and smartphones deliver the 52% shipment growth and 20–40% price uplift embedded in NAND forecasts, and that enterprises proceed with planned model deployments. Any slowdown in AI adoption, either because of economic weakness or because ROI disappoints, would pull forward the cyclical downswing in memory and expose the stock to a sharp de-rating from the current $360+ zone.

Investment View On NASDAQ:MU – Bullish, With A Clear Buy Stance

Pulling everything together, NASDAQ:MU is no longer being valued as a standard DRAM name trading at single-digit multiples through violent cycles. At around $362–$363, the company commands a $408 billion market cap, a trailing 34–35x P/E and sits at the top of its $61.54–$365.81 yearly range. The fundamentals justify that shift. First-quarter revenue has moved from $8.7 billion to about $13.6 billion in a year, operating margins have expanded from roughly 25% to about 45%, EPS is projected to grow roughly 293% in fiscal 2026 and a further 27% in 2027, and both DRAM and NAND are benefitting from AI-driven volume and pricing tailwinds. Detailed models reasonably support a $390 base-case and a more aggressive path toward $600 if earnings track the $41.40 per-share trajectory and the market sustains a mid-teens to high-teens multiple.

Given the current price near $362–$363, the structure of AI-memory demand, the tight oligopoly in HBM and advanced DRAM/NAND, and the clear insider conviction, the stock still screens as Bullish and a Buy, not a hold or a fade. The risk profile is real – cyclicality, capex and AI adoption remain the pressure points – but the numbers in front of investors right now argue that NASDAQ:MU is still in the middle of a historic AI memory repricing, not at the end of it.