Micron Stock Price Forecast: NASDAQ:MU Near $400 With AI Memory Boom Pointing to $450–$500 Upside

MU at $399.65 vs $412.43 high, with record growth, sold-out AI memory, new Taiwan fab and big insider buying, points to a higher Micron stock price target ahead

Micron Technology (NASDAQ:MU) – AI memory engine repriced at $399

Headline numbers for NASDAQ:MU after the Q1 2026 earnings reset

NASDAQ:MU is trading around $399.65, after printing an intraday high of $412.43 and a 52-week range of $61.54–$412.43. That is a 264% move in twelve months and roughly 44% in the last month, with a market cap near $450 billion, a trailing P/E of about 38 and a token dividend yield of 0.12%. Those multiples only make sense because the earnings base has completely reset. Latest reported quarterly revenue came in around $13.6–$13.64 billion, up roughly 56–57% year-on-year. Net income jumped to about $5.24 billion, with adjusted EPS in the $4.60–$4.78 band, representing roughly 167–175% EPS growth versus the prior year. Free cash flow flipped from barely positive a year earlier to more than $3 billion in the quarter. At the same time, Micron beat revenue expectations by about $760 million and EPS expectations by roughly $0.80, which is not incremental outperformance; it is a step-change in profitability. Company-wide gross margin expanded by roughly 17.3 percentage points, and operating margin expanded by about 19.5 percentage points, moving net margin into the high-30s. You are not looking at a mild recovery from a memory downturn; you are looking at a new earnings regime built on structurally tighter supply and AI-driven demand.

Revenue mix and where NASDAQ:MU is really making its money

Approximately 79% of Micron’s revenue in the quarter came from DRAM, with that segment growing about 69% year-on-year. NAND and storage products filled in the rest, also up strongly, but DRAM and high-bandwidth memory are clearly the core of the story. The Cloud / data-center business unit is now the main growth engine, riding hyperscaler spend on AI training and inference clusters that are saturated with HBM and high-capacity server DRAM. Client and mobile memory are benefiting from AI-PC and flagship smartphone cycles, but they are no longer the dominant driver. The Automotive and Embedded Business Unit, while still the smallest line, delivered year-on-year revenue growth close to 50% and gross margins around 45%, helped by ADAS, infotainment and early autonomous platforms that are hungry for both DRAM and NAND. Critically, Micron has already exited its lower-margin Crucial consumer RAM/SSD brand, which means the mix is shifting structurally away from price-sensitive, promotional consumer channels and toward contracted, long-cycle infrastructure buyers.

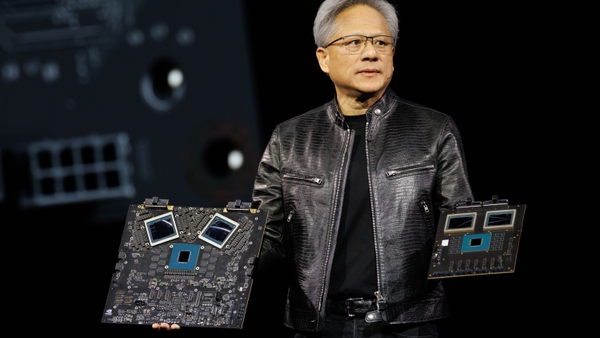

AI capex supercycle turns Micron into core infrastructure

The fundamental backdrop is simple: generative AI and large model inference have turned memory from a commodity input for gadgets into a strategic bottleneck for data centers. Public capex estimates for AI infrastructure around 2026 sit in the ~$527 billion range, and a large fraction of that is going directly into AI accelerators, servers and supporting infrastructure. Of that accelerator spend, roughly two-thirds of the bill of materials is memory and advanced packaging. Under conservative assumptions that 30–40% of AI capex is going to GPUs and accelerators, and about 66% of that figure is effectively memory, you are looking at an AI-linked memory TAM that lands in the ~$100–140 billion band for 2026 alone. Micron is one of a handful of suppliers capable of delivering HBM and leading-edge DRAM at the volumes and specs required by top-tier GPUs. That moves NASDAQ:MU out of the old smartphone/PC cycle and into the center of the AI compute stack for at least the next several years.
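The TAM arithmetic in the paragraph above can be reproduced in a few lines. This is a minimal sketch that uses only the figures quoted in the text (~$527 billion of AI capex, a 30–40% accelerator share, about 66% memory content); the function name and constants are illustrative, not part of any published model.

```python
def ai_memory_tam(total_capex_bn: float, accel_share: float, memory_share: float) -> float:
    """Return the AI-linked memory TAM in billions of dollars.

    total_capex_bn: total AI infrastructure capex (billions)
    accel_share:    fraction of capex spent on GPUs and accelerators
    memory_share:   fraction of accelerator spend that is effectively memory
    """
    return total_capex_bn * accel_share * memory_share


# Inputs quoted in the article (estimates, not reported results).
TOTAL_AI_CAPEX_BN = 527.0
MEMORY_SHARE = 0.66

tam_low = ai_memory_tam(TOTAL_AI_CAPEX_BN, 0.30, MEMORY_SHARE)
tam_high = ai_memory_tam(TOTAL_AI_CAPEX_BN, 0.40, MEMORY_SHARE)

print(f"AI-linked memory TAM: ${tam_low:.0f}B to ${tam_high:.0f}B")
```

The result lands at roughly $104 billion to $139 billion, consistent with the ~$100–140 billion band cited. The estimate is linear in each input, so a 10% cut to the capex forecast trims the TAM by the same 10%.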

From commodity cycles to structurally tighter DRAM and HBM markets

Historically, memory was a textbook boom-bust industry: demand driven by consumer PCs, phones and consoles; supply expansions timed badly; and each capex cycle ending with price collapses and losses. The current data shows why that playbook is breaking. Demand is now anchored by AI data centers and long-term hyperscaler roadmaps, not by holiday sales of laptops. High-bandwidth memory is tightly co-designed with GPU packaging and qualified per generation; it cannot be dumped into secondary channels when demand rolls. Micron’s exit from consumer-facing Crucial products is a direct acknowledgment that corporate capital needs to be focused on AI, cloud, and automotive, where demand is less discretionary and product differentiation is real. At the same time, competitors are not flooding the market with unconstrained capacity. Instead, you see multi-year investment programs staged over several cycles, and you see explicit commentary from the industry that AI-grade memory is sold out well into 2026. The result is structurally tighter supply, firmer pricing and less amplitude in the classic DRAM bust pattern, at least while AI build-outs dominate the demand side.

Pricing power: DRAM and HBM shortages, sold-out AI capacity and Q2 guidance

The current guidance confirms that Micron is not only volume-driven; it is pricing-driven. One large sell-side analyst raised their price target to $330 with an “outperform” stance, explicitly calling for DRAM prices to rise 20–25% sequentially in Q2 and to continue increasing through 2026 as AI data-center demand outruns supply. Micron’s own Q2 outlook is more aggressive than the external model. At a midpoint revenue guide of about $18.7 billion, year-on-year growth would jump to roughly 132%, accelerating sharply from the 57% already printed. Gross margin is guided around 67–68%, which represents more than 30 percentage points of expansion versus the prior year. Operating expenses are forecast around $1.38 billion, up about 32% year-on-year, far below the revenue growth rate, so incremental margins remain extremely high. EPS guidance around $8.19–$8.42 implies about 440% year-on-year earnings growth. On top of that, management and industry commentary indicate that AI-oriented memory – especially HBM and certain high-capacity server DRAM lines – is effectively sold out for 2026. That combination of locked-in volumes, rising prices and leveraged margins is exactly why you are seeing the share price and earnings move in tandem.
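To make the operating-leverage point concrete, the sketch below backs out the prior-year figures implied by the quoted growth rates and compares incremental opex with incremental revenue. The prior-year values are derived from the article's percentages, not taken from Micron's filings, and the helper name is hypothetical.

```python
def implied_prior(current: float, yoy_growth: float) -> float:
    """Back out the prior-year value implied by a current value and its YoY growth rate."""
    return current / (1.0 + yoy_growth)


# Guidance figures quoted in the article (billions of dollars).
REVENUE_GUIDE_BN = 18.7   # midpoint revenue guide
REVENUE_GROWTH = 1.32     # roughly 132% year-on-year
OPEX_GUIDE_BN = 1.38      # forecast operating expenses
OPEX_GROWTH = 0.32        # about 32% year-on-year

prior_revenue = implied_prior(REVENUE_GUIDE_BN, REVENUE_GROWTH)
prior_opex = implied_prior(OPEX_GUIDE_BN, OPEX_GROWTH)

# Share of each incremental revenue dollar absorbed by incremental opex.
incremental_opex_share = (OPEX_GUIDE_BN - prior_opex) / (REVENUE_GUIDE_BN - prior_revenue)

print(f"Implied prior-year revenue: ${prior_revenue:.2f}B")
print(f"Implied prior-year opex:    ${prior_opex:.2f}B")
print(f"Incremental opex per incremental revenue dollar: {incremental_opex_share:.1%}")
```

Under these inputs, only about three cents of every additional revenue dollar goes to operating expenses, which is the arithmetic behind the claim that incremental margins remain extremely high. Cost of goods sold is deliberately left out, since the article only gives a gross margin range.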

Capacity build-out: Taiwan fab deal, $100B New York megaproject and execution risk

To support this environment, Micron is executing a two-track capacity strategy. Long term, the company is pushing ahead with a U.S. megaproject in New York, with a total investment envelope up to $100 billion over many years. That site is expected to become operational around 2030 and is designed to secure domestic production capacity for advanced memory nodes under a friendlier policy regime. This does nothing to flood the current cycle; it anchors the next one. Closer in, Micron has closed a US$1.8 billion acquisition of a fab in Taiwan from Powerchip Semiconductor Manufacturing. That plant is expected to start shipping significant DRAM wafer volume from the second half of calendar 2027, directly addressing the shortages we are seeing in high-performance memory today. Combined with existing fabs in the U.S. and Asia, this gives Micron a more diversified geographic footprint and better leverage with large customers that care about supply chain resilience. The flip side is execution risk: these are heavy, multi-year capex commitments that assume AI demand remains strong, pricing stays rational, and governments continue to support local manufacturing with subsidies and tax credits. Any slowdown in AI capex or mis-timed capacity ramp can compress margins quickly if the market turns.

Technology edge: HBM4, 1-gamma DRAM and automotive-grade memory leadership

Micron’s technological positioning is now a material part of the thesis, not an afterthought. In high-bandwidth memory, the company has moved from about 10% share in 2022 to roughly 21% by mid-2025, overtaking one major Korean competitor and closing the gap on the current leader around 62%. HBM4 samples are quoted at pin speeds around 11 Gbps, above reported 10 Gbps levels from key rivals, indicating that Micron is at or near the front of the performance curve. On the DRAM side, the 1-gamma node reached mature yields about 50% faster than the previous node, delivering more than 20% lower power density and over 30% higher bit density. Next-generation 1-delta and 1-epsilon developments are already underway, keeping the roadmap viable for both servers and mobile. That performance and efficiency profile is exactly what hyperscalers and GPU vendors need as logic performance has scaled ~475x since 2016 while memory bandwidth has lagged at ~11x growth, creating a “memory wall” that only advanced DRAM and HBM can alleviate.

Automotive and embedded: underpriced growth driver for NASDAQ:MU

Automotive and embedded systems are still a minority share of NASDAQ:MU revenue but they are strategically important. The segment grew about 48.5% year-on-year in Q3 2025 and expanded gross profit by roughly 125% to margins near 45%. Vehicle memory footprints are rising from roughly 90 GB of combined DRAM and NAND today toward ~278 GB around 2026, and up to multiple terabytes in high-end autonomous platforms by 2030. Micron estimates aggregate memory bandwidth needs in fully autonomous vehicles exceeding 1 terabit per second. Micron is also the first and so far only supplier with ASIL-D certified LPDDR5X DRAM, delivering 15–25% more bandwidth than the previous generation, 10% lower power consumption and expanded addressable memory space. That safety certification and technical edge matter as robotaxis and advanced driver assistance roll out at scale. Strategically, this segment resembles what AI GPUs were for NVIDIA a decade ago: a niche that becomes mainstream and re-rates the entire business once adoption ramps.

Insider conviction and external opinions around NASDAQ:MU valuation

Insider behavior supports the bullish case despite the run. Board member Teyin Liu recently bought 23,200 shares of Micron for roughly $7.8 million, a direct, sizeable purchase in the open market. This is not stock-based compensation; it is cash being put to work at roughly current prices, which is a strong signal that at least one director believes the upside is far from exhausted. External opinion is more mixed on valuation levels but uniformly positive on fundamentals. One major analyst boosted their target to $330 after Q1, focusing on the 56% revenue growth to $13.6 billion, EPS expansion to $4.60, and the expectation that DRAM prices could rise 20–25% sequentially in Q2 and continue trending higher through 2026 as AI demand “balloons” while supply takes time to catch up. Another detailed valuation framework puts a one-year target around $739.20 based on applying a peer-group forward P/E of about 22x to Micron’s projected earnings, arguing that margin expansion, HBM market-share gains and a sector re-rating justify a premium to current forward multiples. On the quantitative side, Micron shows up with a forward PEG near 0.22x, dramatically below a sector median around 1.6x, which is exactly what a high-growth but still under-re-rated name looks like.
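The $739.20 target can be unpacked by reversing the multiple. The sketch below is illustrative only: it assumes the target is simply price = P/E × EPS, which is how the article describes it, and the variable names are not from the cited framework.

```python
# Figures quoted in the article.
TARGET_PRICE = 739.20     # one-year price target
PEER_FORWARD_PE = 22.0    # peer-group forward P/E applied by that framework
CURRENT_PRICE = 399.65    # share price at time of writing

# Assumption: target = forward P/E * projected EPS, so EPS = target / P/E.
implied_eps = TARGET_PRICE / PEER_FORWARD_PE

# Upside from the current price to the target.
implied_upside = TARGET_PRICE / CURRENT_PRICE - 1.0

print(f"Implied projected EPS: ${implied_eps:.2f}")
print(f"Implied upside to target: {implied_upside:.1%}")
```

The implied EPS of about $33.60 is close to four quarters at the guided Q2 level, and the target sits roughly 85% above the current price. In other words, the framework assumes the guided run-rate persists and that the market pays a peer multiple for it.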

Valuation framework: earnings power, multiples and the AI scenario band

At about $399.65 per share, NASDAQ:MU trades around 38x trailing earnings, but the trailing denominator is already stale relative to the forward curve. Taking the midpoint of the company’s own guidance of roughly $8.19–$8.42 EPS for Q2 alone, annualized earnings power implies a forward P/E in the low double digits if the run-rate holds. Even after the rerating, some screens still show forward multiples in the 11–12x range based on aggressive EPS expansion, which is why you see comments that MU trades at a ~53% discount to the broader IT sector on non-GAAP forward P/E. At the same time, more conservative valuation models flag Micron as trading more than 100% above “fair value” based on a slower growth trajectory and a standard DCF, and note that the current P/E is not far below the semiconductor industry average near 40x. The reality is that the stock is now priced as an AI core infrastructure asset, not a traditional DRAM cyclical. If AI capex holds anywhere close to current forecasts and Micron executes on its capacity and technology roadmap, earnings power supports substantially higher prices than $400. If AI capex normalizes sharply, or if HBM pricing cracks, the multiple compresses and you will feel the downside with leverage.
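The run-rate multiple referred to above is easy to check. This is a rough sketch that assumes the guided Q2 EPS simply repeats for four quarters, a simplification rather than a forecast; the function names are illustrative.

```python
def annualized_eps(quarterly_low: float, quarterly_high: float) -> float:
    """Annualize the midpoint of a quarterly EPS range (assumes a flat run-rate)."""
    midpoint = (quarterly_low + quarterly_high) / 2.0
    return midpoint * 4.0


def price_to_earnings(price: float, eps: float) -> float:
    """Plain price-to-earnings ratio."""
    return price / eps


# Figures quoted in the article.
PRICE = 399.65
Q2_EPS_LOW, Q2_EPS_HIGH = 8.19, 8.42
TRAILING_PE = 38.0

run_rate_eps = annualized_eps(Q2_EPS_LOW, Q2_EPS_HIGH)
forward_pe = price_to_earnings(PRICE, run_rate_eps)
implied_trailing_eps = PRICE / TRAILING_PE

print(f"Run-rate EPS: ${run_rate_eps:.2f}")
print(f"Run-rate forward P/E: {forward_pe:.1f}x")
print(f"Trailing EPS implied by a 38x multiple: ${implied_trailing_eps:.2f}")
```

At a run-rate EPS near $33, the stock trades at about 12x, against a trailing EPS of roughly $10.50 implied by the 38x multiple. The gap between the two is the whole argument: the multiple only looks low if quarterly earnings stay at the guided level.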

Risk map: what can break the NASDAQ:MU bull case from here

The key risks are clear. First, competitive pressure from SK Hynix and Samsung is real; both have deep balance sheets, huge fabs, and the ability to push capacity and pricing aggressively if they choose. Chinese entrants are trying to break into the memory market and could add supply and geopolitical noise over the medium term. Second, AI demand is not guaranteed to grow in a straight line. Industry leaders have already hinted at the possibility of an AI “bubble” in funding and infrastructure, and models like DeepSeek show that there are paths to more compute-efficient AI that require fewer accelerators and potentially less DRAM per unit of useful work. Any pause in hyperscaler capex, or a shift toward more efficient architectures, would hit unit demand and could pressure pricing. Third, Micron’s own capex program is front-loaded: the Taiwan fab must ramp on time and on budget; the New York megaproject must ultimately reach high utilization; and mis-timing either into a softer demand environment would crush marginal returns. Finally, the stock has already moved 264% in a year and roughly 44% in a month; position-size and volatility risk matter even if the long-term thesis is intact.

Buy, sell or hold NASDAQ:MU at $399? Clear stance based on the numbers

On these numbers, Micron has moved from being a volatile, consumer-linked memory supplier to a central supplier of AI infrastructure, with DRAM and HBM shortages, record revenue of ~$13.6 billion per quarter, net margins near 38%, guided EPS growth above 400% year-on-year, AI-grade memory effectively sold out for 2026, a US$1.8 billion Taiwan fab to add DRAM wafers from 2027, a $100 billion New York project for the next decade, HBM4 and 1-gamma DRAM at the leading edge, automotive and embedded memory compounding at ~50% with 45% gross margins, insiders like Teyin Liu buying ~$7.8 million of stock, and forward PEG ratios near 0.2x. The risks are real – competition, AI demand normalization, heavy capex – and the move in the share price has been violent, so you should expect deep drawdowns if sentiment turns. But on a pure risk-reward basis, with this earnings profile and this positioning in the AI stack, I rate NASDAQ:MU a Buy at current levels, skewed toward long-term holders who can tolerate volatility and are willing to own a core AI memory asset rather than try to trade every swing.