NVIDIA Stock Price Forecast - NVDA at $192: Can AI Factories Outrun China’s H200 Roadblock?

With NVDA near $192, a $4.7T valuation and a 52-week range of $86–$212, investors weigh surging AI-data-center capex, China’s H200 blockade and Street targets pushing the stock toward the $250+ zone

NVIDIA (NASDAQ:NVDA) – AI FACTORY GIANT AROUND $192

Big Picture: Where NASDAQ:NVDA Trades Now And What The Market Is Pricing In

NVIDIA (NASDAQ:NVDA) sits around $192 per share. The latest session printed a range of roughly $188–$194 against a 52-week band of $86.63–$212.19, which puts the market cap near $4.7 trillion and the trailing P/E in the high 40s, with a token 0.02% dividend yield.

Despite that size, the equity is still being treated as a structurally high-growth AI infrastructure name: revenue is running at roughly +65% YoY, free-cash-flow margins are exceptional, and Wall Street’s forward P/E near 41x bakes in years of elevated AI capex from hyperscalers, sovereigns and industrial buyers. The stock has slipped about 5% over the last three months even as earnings expectations and AI-buildout headlines moved higher, which is why multiple independent analysts in the source set explicitly upgraded or reaffirmed Strong Buy ratings in January while the price drifted.

From GPU Vendor To Full-Stack AI Factory Platform

The Seeking Alpha pieces make one critical point very clear: the unit of competition has shifted from the single GPU to the full system. NVIDIA is no longer just a card supplier; it is the architect of entire AI factories.

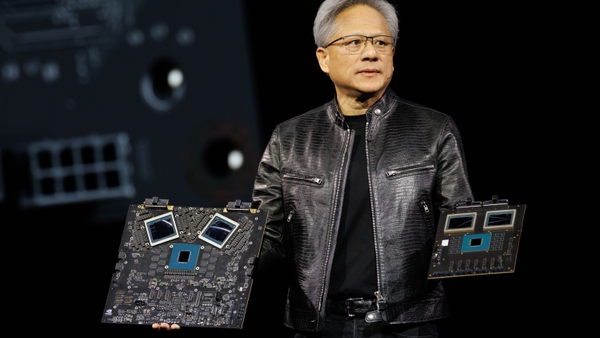

At CES, Jensen Huang emphasized that NVDA now “builds entire systems” and treats AI as a full stack. That means: accelerators, networking, interconnects, storage, software, orchestration and simulation all co-designed as one product. In that structure, the GPU becomes interchangeable at the margin; the value is created at the system level where the integration, software tooling and ecosystem lock-in live.

This is why customers are not simply buying H100, B100 or H200 units; they are buying tightly-integrated racks that behave as one compute organism. Once a hyperscaler or an enterprise standardizes its AI workflows, CUDA stack, networking layout and simulation pipelines on NVIDIA, swapping the GPU for a rival part is operationally expensive and strategically risky. That lock-in is central to the bull case at $192.

Vera Rubin, NVLink And The Economics Of Cost Per Intelligence

The Vera Rubin platform is the purest expression of this system-level strategy. It co-optimizes: GPUs, CPUs, NVLink interconnects, DPUs, memory hierarchy, networking fabric and simulation software so that each rack is treated as a single intelligent node.

Instead of chasing incremental benchmark wins on raw GPU power, the architecture is optimized for cost per unit of “intelligence” – how cheaply and efficiently you can train, fine-tune and deploy large models at scale. NVLink 6 and high-bandwidth fabrics dictate how efficiently compute scales across trays and racks; Spectrum-class networking controls latency and congestion as clusters grow; above that, CUDA and inference-optimization layers translate raw FLOPS into actual model throughput.

In that world, the GPU is necessary but not sufficient. The margin pool and the switching cost both migrate upwards into the full stack, which is exactly where NVDA is strongest and where rivals like AMD or in-house TPUs have the hardest time matching the breadth and maturity of the ecosystem.

From Generative AI To Physical AI: New Demand Beyond LLMs

The sources stress another transition: the next leg of AI is not more chatbots but physical AI, meaning robots, autonomous vehicles, industrial control systems and smart infrastructure operating in the real world.

NVIDIA’s stack is being positioned as the operating system for this phase. Omniverse-style simulation and synthetic-data generation feed Vera Rubin-class compute clusters, which train vision-language-action models and robotics policies. Those models then deploy into robots, AV fleets, factories and edge devices, with real-world telemetry looping back into the training pipeline.

This is structurally different from the 2023–2024 generative AI hype: once a robotaxi network, logistics operator or “lights-out” factory builds its systems on NVDA, the hardware, simulation, software and data flywheel are intertwined. That adds duration to the growth story and justifies using aggressive forward assumptions in the valuation at $192 even after the stock’s huge rerating from the $80–$90 zone over the last year.

China, H200 And The “New Boeing” Trade-War Parallel

The most controversial piece of the puzzle in the source material is China and the H200 chip. Initially, the market treated Chinese demand as a major upside lever: reports pointed to around 400,000 H200 units cleared for top Chinese tech players at about $27,000 each, implying roughly $10–11 billion of near-term sales and a broader pipeline of more than $50 billion if the full reported 2 million-unit interest materialized.
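The revenue figures quoted above follow from simple multiplication, which the sketch below reproduces. The function name `h200_revenue` is illustrative only; the unit counts and the roughly $27,000 price are the reported figures from the text, not confirmed orders.

```python
def h200_revenue(units: int, price_per_unit: int) -> int:
    """Gross revenue in US dollars for a given number of H200 units."""
    return units * price_per_unit


PRICE = 27_000  # reported approximate price per H200, in USD

cleared = h200_revenue(400_000, PRICE)     # units reportedly cleared
pipeline = h200_revenue(2_000_000, PRICE)  # full reported interest

print(f"Cleared units: ${cleared / 1e9:.1f}B")
print(f"Full pipeline: ${pipeline / 1e9:.1f}B")
```

The first figure lands inside the $10–11 billion range and the second above the $50 billion mark cited in the reports.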

However, the newer analysis makes it clear that this demand is not yet unlocked. Chinese customs officials are reported to be blocking H200 shipments, forcing suppliers to pause production and turning the chip into a bargaining tool in the wider US-China tech confrontation. The article explicitly compares NVDA’s H200 position to Boeing’s 737 MAX in China: a high-value US product that becomes a hostage to regulatory gatekeeping and geopolitical leverage.

Crucially, none of the analyses reviewed includes those China H200 sales in its base case. They are treated as optional upside. For NVDA, the real risk is over-building H200 inventory while global customers are rapidly pivoting to more advanced architectures like Blackwell and eventually Rubin. Management appears to be acting rationally by allowing H200 production to slow if China remains blocked, which caps inventory-write-off risk and prevents dead capital from bloating the balance sheet.

If, over time, Beijing and Washington find a durable structure for AI-chip exports and those H200 orders in the tens of billions unlock, that becomes a second-leg catalyst layered on top of already-strong US and allied-market AI demand. If not, the stock still rides the domestic and non-China capex wave.

US AI Capex And Why NVDA Is Still Supply-Constrained

On the demand side, the second NVDA article underlines how aggressive US AI spending is. Microsoft, Meta, Tesla and others are openly telegraphing 2026 as another heavy AI-infrastructure year, with double-digit-billion data-center budgets becoming the norm and total US AI capex for the decade clustering in the multi-trillion-dollar range when you layer in Amazon, Alphabet and major private players.

Even without China, NVIDIA is supply-constrained, not demand-constrained. The reported Chinese interest in over 2 million H200 units at around $27,000 each implies theoretical revenue of $54 billion; with a forward price-to-sales multiple in the low 20s, that single line of upside could mathematically support nearly $1.2 trillion of incremental market cap over several years. But the company does not need that to justify its current $4.7T size: US and non-China AI factories already soak up capacity, and customers like OpenAI are talking about potential $1 trillion infrastructure ambitions on their own.
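The step from $54 billion of theoretical revenue to roughly $1.2 trillion of market cap is a single price-to-sales multiplication. A minimal sketch follows, assuming 22x as a stand-in for "low 20s" (the source does not give an exact multiple); `implied_market_cap` is an illustrative name.

```python
def implied_market_cap(revenue: int, price_to_sales: int) -> int:
    """Market cap implied by applying a price-to-sales multiple to revenue."""
    return revenue * price_to_sales


china_revenue = 2_000_000 * 27_000  # reported interest x reported price = $54B

# 22x is an assumed reading of "low 20s"; 20x and 24x bracket the sensitivity.
for ps in (20, 22, 24):
    cap = implied_market_cap(china_revenue, ps)
    print(f"P/S {ps}x -> ${cap / 1e12:.2f}T")
```

The result is sensitive to the multiple chosen, which is one reason the figure is best read as a ceiling on the optionality rather than a forecast.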

This is why independent analysts in the sample can reasonably call NVDA “too cheap to stop buying” after a 5% pullback: the stock has moved sideways since late October while earnings estimates and capex signals have drifted higher, compressing the forward multiples.
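The compression mechanism is mechanical: forward P/E is price divided by expected earnings per share, so a flat price with rising estimates lowers the multiple. The sketch below illustrates this with the $192 price and 41x multiple from the text; the 10% upward revision is a hypothetical number chosen for illustration, not a figure from the sources.

```python
def forward_pe(price: float, forward_eps: float) -> float:
    """Forward price-to-earnings ratio."""
    return price / forward_eps


price = 192.0
eps_before = price / 41.0       # forward EPS implied by a 41x multiple
eps_after = eps_before * 1.10   # hypothetical 10% upward estimate revision

pe_before = forward_pe(price, eps_before)
pe_after = forward_pe(price, eps_after)

print(f"Forward P/E before revision: {pe_before:.1f}x")
print(f"Forward P/E after revision:  {pe_after:.1f}x")
```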

Financial Firepower: Cash, FCF And R&D Muscle Versus AMD

The comparative analysis versus AMD in the source material makes the capital-allocation gap explicit. NVIDIA’s cash pile is now above $60 billion, roughly 9x AMD’s, and trailing free cash flow is about 18x larger. That gives NVDA vastly more room to outspend on R&D and capex without stressing the balance sheet.

On combined R&D plus capex, NVIDIA already invests roughly 2.5–3x what AMD does, and it does so with a historical ROIC near 40% over the last decade, versus a single-digit average for AMD. That combination – larger scale, higher spending, and much more efficient incremental returns – is why the articles characterize NVIDIA’s moat as widening even as AMD’s hardware catches up in headline specs.

For valuation, the author’s work comparing forward P/E trajectories shows that between mid-November 2025 and late January 2026, forward P/E for FY2029 compressed to about 17x while China tailwinds, US capex and system-level dominance all became clearer. Relative to AMD, NVDA actually screens cheaper on P/E and price-to-FCF despite carrying higher price-to-sales ratios – a direct consequence of its superior profitability and capital efficiency.

Valuation, PEG And Where Targets Cluster Versus $192 Spot

One of the pieces explicitly builds a PEG-based target. With a PEG near 1.08 against a sector median around 1.63, NVDA screens as roughly 34% undervalued versus its peer-group growth profile. Marking the $192 spot price up by that 34% gives a theoretical fair value around $257, which lines up closely with the average Wall Street target range quoted in the analysis.
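The PEG arithmetic can be reproduced in a few lines. This is a sketch of the calculation as the text describes it, with `peg_discount` as an illustrative name. The second target in the code is an alternative reading added here as an assumption, not something the source computes: it shows what the price would be if the PEG re-rated all the way to the sector median with growth expectations unchanged.

```python
def peg_discount(peg: float, sector_median_peg: float) -> float:
    """Fraction by which a PEG ratio sits below the sector median."""
    return 1 - peg / sector_median_peg


SPOT = 192.0
discount = peg_discount(1.08, 1.63)  # roughly 0.34

# Approach described in the text: mark spot up by the rounded discount.
article_target = SPOT * (1 + round(discount, 2))

# Assumption, not from the source: full re-rating to the median PEG.
rerated_target = SPOT * 1.63 / 1.08

print(f"PEG discount:        {discount:.1%}")
print(f"Text-style target:   ${article_target:.2f}")
print(f"Full re-rate target: ${rerated_target:.2f}")
```

The gap between the two outputs comes from the usual asymmetry between a percentage discount and the percentage gain needed to close it, so the $257 figure is the more conservative of the two readings.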

A separate EV/EBITDA-based framework from The Aerospace Forum yields a low-end ~$201 price target (peer-group multiple on FY2027 earnings), a base case around $244 (FY2026), and an optimistic path to $300+ if you assume China normalizes and you use FY2028 numbers as the anchor. Under current information, the author explicitly flags the $300+ range as requiring positive China news, but the $200–$240 band is supported even with zero China contribution.
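To see how far each scenario sits from spot, the targets can be converted into percentage upside. This is a minimal sketch using the three price levels quoted above; $300 stands in for the "$300+" optimistic case, and the scenario labels are shorthand rather than the source's terms.

```python
SPOT = 192.0

# Price targets quoted in the text; 300.0 is the floor of the "$300+" case.
targets = {"low": 201.0, "base": 244.0, "optimistic": 300.0}


def upside(target: float, spot: float = SPOT) -> float:
    """Fractional upside from spot to a price target."""
    return target / spot - 1


for name, target in targets.items():
    print(f"{name:>10}: ${target:.0f} -> {upside(target):+.1%}")
```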

Put simply: at $192, you are paying below both the peer-relative PEG-implied value and the core EV/EBITDA-driven base case, while the company continues to grow revenue at 60%+ and stack free cash flow, with no net debt and the ability to build a $200B+ cash position by 2028 if current trends hold.

Risk Grid: China, Geopolitics, Memory, Competition And Multiple Compression

The main risks highlighted across the articles are clear and concrete. First, geopolitics: a serious escalation around Taiwan would directly threaten NVDA’s supply chain because foundry capacity is heavily concentrated in TSMC. Even if you view outright war as low probability, markets will continuously price some tail risk into the multiple given the scale of the impact.

Second, China trade and export controls: the back-and-forth around H200 shows how quickly upside can turn into political leverage. Tariff structures, export controls and customs gatekeeping can all cap or delay Chinese revenue even when chips are technically allowed.

Third, competition: AMD is already winning share in x86 CPUs and is pushing aggressively in AI accelerators. The historical chart in the source material, which shows AMD moving desktop CPU share from ~9% to 33% in a decade while Intel fell from above 90%, is a reminder that “unassailable” moats can be eroded. If AMD ever manages to combine competitive accelerators with a stickier software ecosystem, or if hyperscalers push successful in-house silicon, NVIDIA’s dominance could narrow.

Fourth, input cost and memory constraints: Micron’s comments about “unprecedented” HBM shortages and multi-billion-dollar fab investment plans highlight that memory availability can squeeze margin and limit shipment growth. Samsung’s HBM ramp may relieve some pressure, but investors should expect periods where memory pricing crimps data-center margins, even if NVDA manages to pass a portion of the inflation to customers.

Finally, valuation and sentiment: even with forward multiples compressing, you are still paying a premium for perfection at ~41x forward earnings. Any slowdown in AI capex, execution misstep, or major macro shock can trigger sharp multiple compression from these levels. That is a permanent feature of owning a mega-cap growth leader, not a bug.

Strategic Take At ~$192: How The Data Lines Up

Connecting all of the above: at roughly $192 per share, NASDAQ:NVDA is a high-growth AI infrastructure monopoly in practice, with: system-level dominance, a full-stack AI factory model, powerful optionality on physical AI, asymmetric upside if China normalizes, and a balance sheet that lets it massively out-invest rivals while still compounding at exceptional ROIC.

The sources converge on the same point from different angles: PEG, EV/EBITDA, peer comparisons and cash-flow trajectories all cluster in a base-case value band above the current price, with upside scenarios stretching into the high $200s and, in the optimistic EV/EBITDA case, to $300+ if structural AI tailwinds and China contributions both play out. The bear arguments are almost entirely about exogenous shocks (geopolitics, export controls, macro risk) and the normal dangers of a rich multiple, not about a broken business model.

Within that framework, the data reviewed supports treating NVDA at $192 as still fundamentally aligned with a bullish, long-term Buy stance, with the understanding that volatility around China headlines, memory constraints and AI-capex sentiment is a feature of the trade, not an exception.